How to use PostgreSQL as a sink (Azure Data Factory)

This is a sample of how to use PostgreSQL as a sink in Azure Data Factory (ADF).

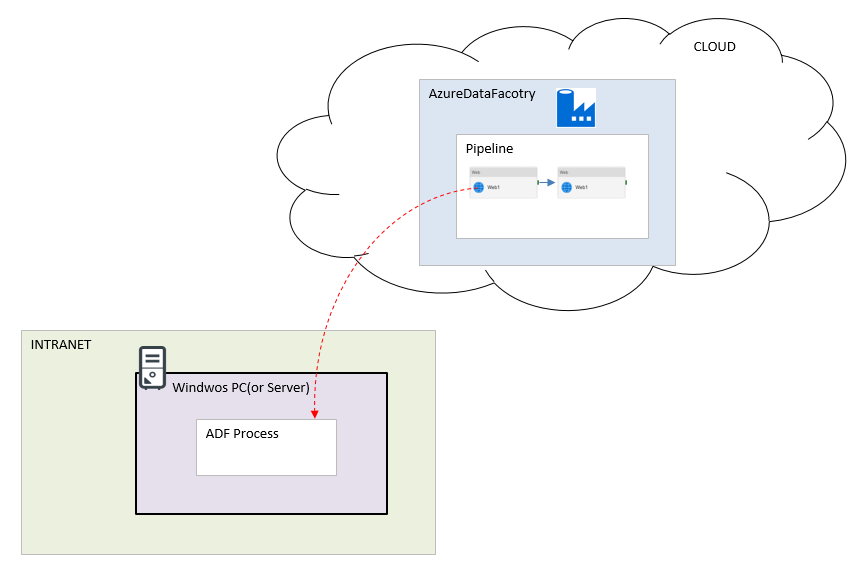

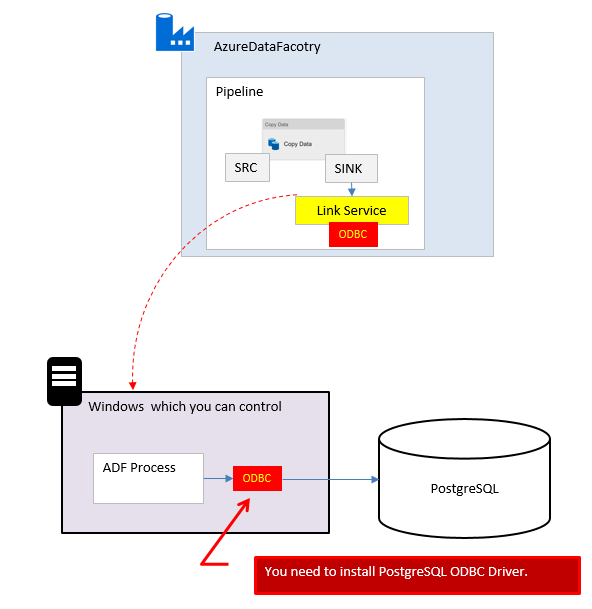

ADF can’t use PostgreSQL as a sink without a self-hosted integration runtime.

So, first, you need to set up a self-hosted integration runtime.

(See “What is the Self Hosted Runtime (Azure Data Factory)”.)

There is no PostgreSQL linked service that can be used as a sink.

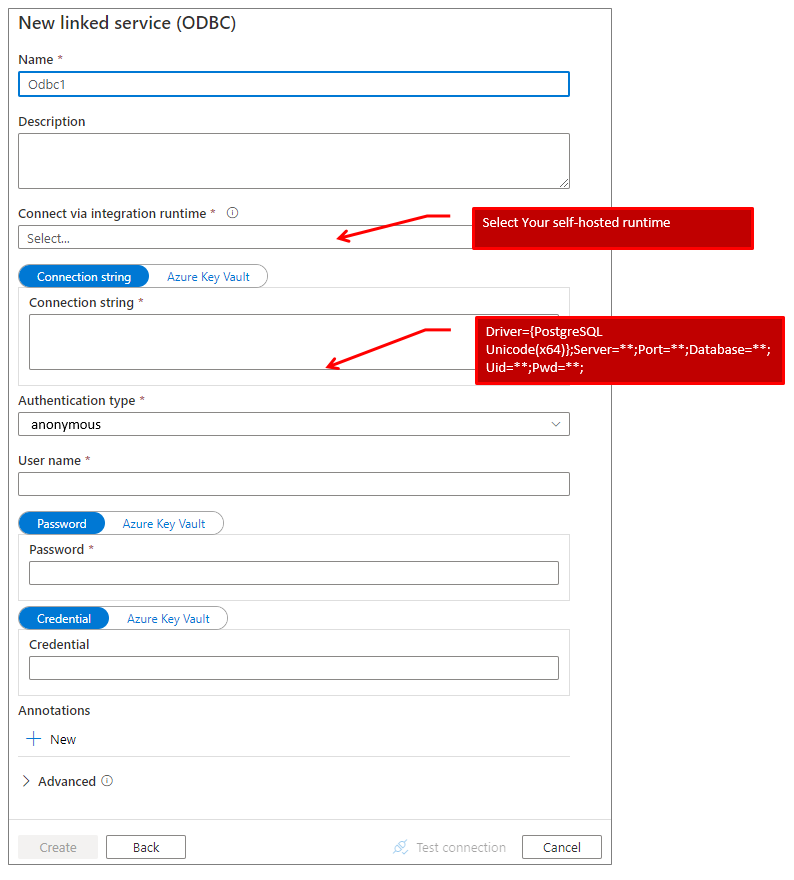

So you need to use the “ODBC” linked service instead.

You need to set up the environment as follows.

■Step1

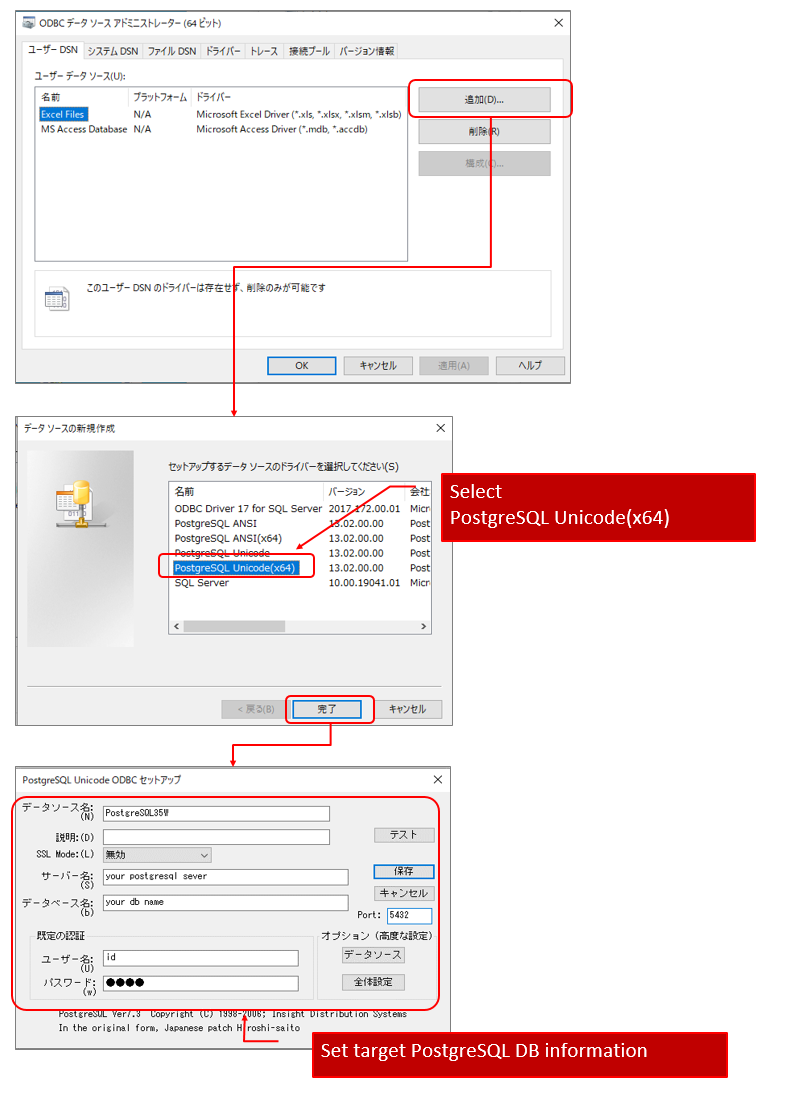

Install the PostgreSQL ODBC driver (psqlODBC) on the machine that hosts the self-hosted integration runtime, as follows.

(1) Download the PostgreSQL ODBC driver

(1.1) Open https://www.postgresql.org/

(1.2) Go to “Download” -> “File Browser” -> “odbc” -> “versions” -> “msi”

(1.3) Download psqlodbc_13_02_0000-x64.zip (I first tried version 9.2, but it didn’t work)

(2) Install the ODBC driver
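Once the driver is installed, the ODBC linked service needs a connection string that names it. The Python sketch below assembles one. It assumes the 64-bit psqlODBC installer registers the driver as `PostgreSQL Unicode(x64)`; check the Drivers tab of the 64-bit ODBC Data Source Administrator on the integration runtime machine to confirm the exact name. The function name and the host and database values are hypothetical. Credentials are left out because, as far as I know, the ADF ODBC linked service takes the user name and password as separate properties.

```python
def build_psqlodbc_connection_string(
    server: str,
    database: str,
    port: int = 5432,
    driver: str = "PostgreSQL Unicode(x64)",  # assumed driver name; verify on the IR machine
) -> str:
    """Return an ODBC connection string for psqlODBC (hypothetical helper).

    User name and password are intentionally omitted; they are set as
    separate properties on the ADF ODBC linked service.
    """
    fields = {
        "Driver": "{" + driver + "}",  # braces quote a name containing spaces/parentheses
        "Server": server,
        "Port": str(port),
        "Database": database,
    }
    for key, value in fields.items():
        # A ';' inside a value would split the string into extra key=value pairs.
        if ";" in value:
            raise ValueError(f"{key} must not contain ';': {value!r}")
    return "".join(f"{key}={value};" for key, value in fields.items())


if __name__ == "__main__":
    # Hypothetical host and database names.
    print(build_psqlodbc_connection_string("pg.example.com", "salesdb"))
    # prints: Driver={PostgreSQL Unicode(x64)};Server=pg.example.com;Port=5432;Database=salesdb;
```

If you created a system DSN on the integration runtime machine instead, the connection string can simply be `DSN=<your DSN name>;`.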

■Step2

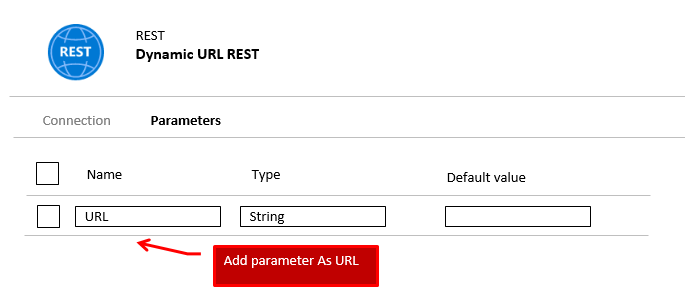

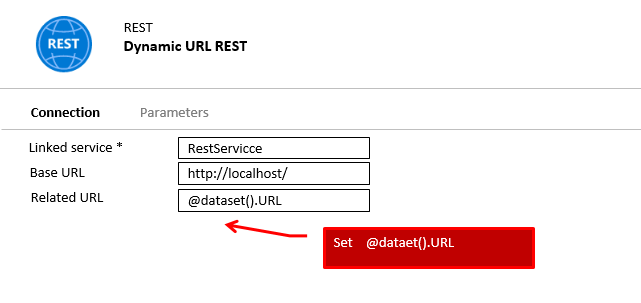

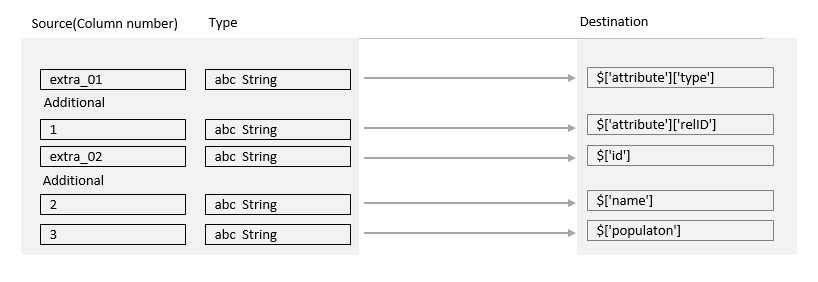

(1) Set the sink dataset type to “ODBC”

(2) Configure the ODBC linked service as below.
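If you prefer to keep these objects as JSON (for example, in a Git-connected factory) rather than clicking through the UI, the Python sketch below builds the two definitions as dictionaries. The property names (`Odbc`, `connectionString`, `authenticationType`, `connectVia`, `OdbcTable`, `tableName`) follow my reading of the ADF ODBC connector documentation; treat them as assumptions to check against the current docs. All object names (linked service, dataset, integration runtime, table) are hypothetical.

```python
import json


def odbc_linked_service(name: str, connection_string: str, user_name: str,
                        password: str, integration_runtime: str) -> dict:
    """ODBC linked service that runs on a self-hosted integration runtime."""
    return {
        "name": name,
        "properties": {
            "type": "Odbc",
            "typeProperties": {
                "connectionString": connection_string,  # without credentials
                "authenticationType": "Basic",
                "userName": user_name,
                # Inline SecureString for brevity; a Key Vault reference is safer.
                "password": {"type": "SecureString", "value": password},
            },
            # Route the connection through the self-hosted IR.
            "connectVia": {
                "referenceName": integration_runtime,
                "type": "IntegrationRuntimeReference",
            },
        },
    }


def odbc_sink_dataset(name: str, linked_service: str, table_name: str) -> dict:
    """ODBC dataset pointing at the target PostgreSQL table."""
    return {
        "name": name,
        "properties": {
            "type": "OdbcTable",
            "linkedServiceName": {
                "referenceName": linked_service,
                "type": "LinkedServiceReference",
            },
            "typeProperties": {"tableName": table_name},
        },
    }


if __name__ == "__main__":
    # All names below are hypothetical placeholders.
    ls = odbc_linked_service(
        "PostgresOdbc",
        "Driver={PostgreSQL Unicode(x64)};Server=pg.example.com;Port=5432;Database=salesdb;",
        "adf_user", "<password>", "SelfHostedIR",
    )
    ds = odbc_sink_dataset("PostgresSinkTable", "PostgresOdbc", "public.sales")
    print(json.dumps(ls, indent=2))
    print(json.dumps(ds, indent=2))
```

In the copy activity that writes to this dataset, the sink type should then be `OdbcSink` (again, per my reading of the connector docs).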